- February 20, 2026

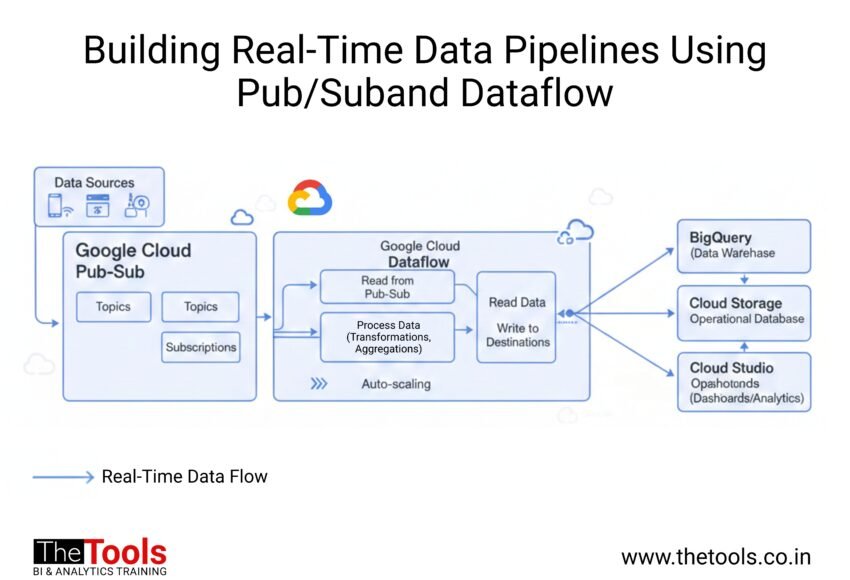

Building Real-Time Data Pipelines Using Pub/Sub and Dataflow

In the modern data-driven world, organizations are inundated with massive volumes of data generated every second. From social media interactions to IoT sensor readings, this continuous stream of data holds immense value if processed and analyzed promptly. This is where real-time data pipelines come into play.

A data pipeline is a sequence of data processing steps where data is ingested, transformed, and delivered to a target system for analysis or storage. Unlike traditional batch pipelines, real-time pipelines process data as it arrives, enabling immediate insights and faster decision-making.

Why Real-Time Pipelines Are Essential

- Fraud Detection: Monitoring transactions instantly to flag suspicious activities.

- IoT Monitoring: Collecting sensor data to trigger alerts or control systems in real time.

- Personalized Marketing: Delivering tailored recommendations based on live user behavior.

- Operational Monitoring: Tracking system health and logs continuously.

Despite their advantages, real-time pipelines must address challenges like data volume spikes, event ordering, latency minimization, fault tolerance, and schema changes. Cloud-native services like Google Cloud’s Pub/Sub and Dataflow abstract much of this complexity.

Overview of Google Cloud Pub/Sub and Dataflow

What is Google Cloud Pub/Sub?

Google Cloud Pub/Sub is a messaging service that facilitates asynchronous communication between independent systems using the publish-subscribe messaging pattern.

- Topics act as named channels where messages are published.

- Subscriptions receive messages from topics.

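- At-least-once delivery means every message reaches each subscription at least once, though duplicates are possible.

- Automatic scaling adjusts capacity to publish and subscribe throughput, with no brokers to provision.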

What is Google Cloud Dataflow?

Dataflow is a serverless stream and batch processing service built on Apache Beam. It manages autoscaling, provisioning, and fault tolerance automatically.

- Supports both batch and stream processing.

- Advanced windowing and triggering mechanisms.

- Integration with BigQuery, Cloud Storage, Bigtable.

- Built-in monitoring and logging.

Understanding Real-Time Messaging with Pub/Sub

Key Features

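- Message Durability: Messages are retained until a subscriber acknowledges them or the subscription's message retention period (7 days by default) expires.

- At-least-once Delivery: Each message is delivered at least once per subscription, so subscribers may see duplicates and should process them idempotently.

- Ordering: Messages that share an ordering key are delivered in publish order when message ordering is enabled on the subscription.

- Pull vs Push: Subscribers can pull messages on demand, or Pub/Sub can push them to an HTTPS endpoint.

- Filtering: A subscription can carry a filter on message attributes so that it receives only matching messages.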
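Because delivery is at-least-once, the same message can arrive more than once, so handlers are usually written to be idempotent. The following is a minimal sketch in plain Python, assuming each message carries a unique ID. The `IdempotentHandler` class and the message IDs are hypothetical, and this is not the Pub/Sub client API.

```python
class IdempotentHandler:
    """Applies each message at most once, keyed by message ID (hypothetical helper)."""

    def __init__(self):
        self.seen_ids = set()   # IDs already processed; a real system would bound or persist this
        self.total = 0          # example side effect: a running sum of payload values

    def handle(self, message_id: str, value: int) -> bool:
        """Return True if the message was applied, False if it was a duplicate."""
        if message_id in self.seen_ids:
            return False
        self.seen_ids.add(message_id)
        self.total += value
        return True


handler = IdempotentHandler()
# The second "m1" simulates a redelivery of the same message.
results = [handler.handle(mid, v) for mid, v in [("m1", 10), ("m2", 5), ("m1", 10)]]
print(results, handler.total)
```

The duplicate is detected and skipped, so the running total reflects each message only once.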

Message Flow

- Publishers send messages to a topic.

- Pub/Sub stores messages until acknowledged.

- Subscribers receive messages.

- Messages are acknowledged after processing.
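The flow above can be modeled with a toy in-memory broker. This is only an illustrative sketch of the publish, store, deliver, and acknowledge cycle. The `ToyTopic` class and its methods are invented for this example and do not mirror the real Pub/Sub client library; the `expire_deadlines` method stands in for an acknowledgement deadline passing.

```python
from collections import deque


class ToyTopic:
    """In-memory stand-in for one topic with one subscription (illustrative only)."""

    def __init__(self):
        self.pending = deque()   # published, not yet delivered
        self.in_flight = {}      # delivered, awaiting acknowledgement
        self.next_id = 0

    def publish(self, data: str) -> int:
        msg_id = self.next_id
        self.next_id += 1
        self.pending.append((msg_id, data))
        return msg_id

    def pull(self):
        """Deliver the next message; it stays stored until acked."""
        if not self.pending:
            return None
        msg_id, data = self.pending.popleft()
        self.in_flight[msg_id] = data
        return msg_id, data

    def ack(self, msg_id: int) -> None:
        """Acknowledge a message so it is not delivered again."""
        self.in_flight.pop(msg_id, None)

    def expire_deadlines(self) -> None:
        """Simulate ack deadlines passing: unacked messages return to the queue."""
        for msg_id, data in sorted(self.in_flight.items()):
            self.pending.append((msg_id, data))
        self.in_flight.clear()


topic = ToyTopic()
topic.publish("reading-1")
topic.publish("reading-2")

first = topic.pull()
topic.ack(first[0])            # processed successfully
second = topic.pull()          # subscriber "crashes" before acking this one
topic.expire_deadlines()
redelivered = topic.pull()     # the unacked message is delivered again
print(first, second, redelivered)
```

The acknowledged message never reappears, while the unacknowledged one is redelivered, which is why subscribers acknowledge only after processing succeeds.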

Designing Data Pipelines with Dataflow

Core Concepts

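- PCollections: Distributed datasets, bounded or unbounded, that flow through the pipeline.

- Transforms: Operations applied to PCollections, such as ParDo (per-element processing), GroupByKey (grouping values by key), and Combine (aggregation).

- Sources/Sinks: Connectors that read from and write to external systems such as Pub/Sub and BigQuery.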
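To make the three transforms concrete, here is what each one does to the data, written in plain Python rather than with the Beam SDK. It is a sketch of the semantics only: the record format (`device_id,temperature`) and the `parse` function are assumptions made for this example, and a real pipeline would express the same steps with Beam transforms over a PCollection.

```python
from collections import defaultdict

# A "PCollection" is modeled here as a plain list of raw records.
raw = ["a,20.0", "b,30.0", "a,22.0", "bad-record"]


def parse(record):
    """ParDo-style step: emits zero or more outputs per input element."""
    parts = record.split(",")
    if len(parts) == 2:
        yield parts[0], float(parts[1])
    # Malformed records emit nothing.


# ParDo: apply parse to every element and flatten the outputs.
parsed = [out for record in raw for out in parse(record)]

# GroupByKey: collect all values that share a key.
grouped = defaultdict(list)
for key, value in parsed:
    grouped[key].append(value)

# Combine: reduce each key's values to a single result (here, the mean).
means = {key: sum(values) / len(values) for key, values in grouped.items()}
print(means)
```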

Windowing Types

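- Fixed Windows: Consecutive, non-overlapping intervals of equal length (for example, every 60 seconds).

- Sliding Windows: Fixed-length intervals that start at a regular period and can overlap, so one element may belong to several windows.

- Session Windows: Per-key windows that group bursts of activity and close after a gap of inactivity.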
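The difference between the three window types is easiest to see in how a timestamp is assigned to windows. The functions below are a plain-Python sketch of that assignment logic, not the Beam implementation. The function names are made up for this example, timestamps are plain numbers in seconds, and sessions are computed for a single key.

```python
def fixed_window(ts: float, size: float) -> tuple:
    """Return the [start, end) fixed window containing timestamp ts."""
    start = ts - (ts % size)
    return (start, start + size)


def sliding_windows(ts: float, size: float, period: float) -> list:
    """Return every [start, end) sliding window that contains ts."""
    windows = []
    start = ts - (ts % period)       # latest window start at or before ts
    while start > ts - size:         # this window still covers ts
        windows.append((start, start + size))
        start -= period
    return sorted(windows)


def session_windows(timestamps: list, gap: float) -> list:
    """Group timestamps into sessions split wherever inactivity reaches `gap`."""
    sessions = []
    for ts in sorted(timestamps):
        if sessions and ts - sessions[-1][-1] < gap:
            sessions[-1].append(ts)   # still within the current session
        else:
            sessions.append([ts])     # gap reached: start a new session
    return sessions


print(fixed_window(125, 60))
print(sliding_windows(125, 60, 30))
print(session_windows([1, 3, 20, 22, 50], 10))
```

An event at second 125 falls into exactly one 60-second fixed window but into two 60-second sliding windows that start every 30 seconds, while session windows depend only on the spacing between events.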

Building a Real-Time Data Pipeline

gcloud pubsub topics create sensor-data-topic

gcloud pubsub subscriptions create sensor-data-subscription --topic=sensor-data-topic --ack-deadline=30

Example: Apache Beam (Python)

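import json

import apache_beam as beam
from apache_beam.options.pipeline_options import PipelineOptions

class ParseMessage(beam.DoFn):
    def process(self, element):
        # Pub/Sub payloads arrive as bytes; this assumes each one is a JSON object.
        yield json.loads(element.decode('utf-8'))

# Pub/Sub is an unbounded source, so the pipeline runs in streaming mode.
options = PipelineOptions(streaming=True)

with beam.Pipeline(options=options) as pipeline:
    (pipeline
     # Full resource path is required; 'your-project' is a placeholder.
     | 'Read' >> beam.io.ReadFromPubSub(subscription='projects/your-project/subscriptions/your-subscription')
     | 'Parse' >> beam.ParDo(ParseMessage())
     # Assumes dataset.table already exists with columns matching the JSON keys.
     | 'Write' >> beam.io.WriteToBigQuery('dataset.table', create_disposition=beam.io.BigQueryDisposition.CREATE_NEVER))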
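The parsing logic inside a step like `ParseMessage` can be developed and tested as a plain function before it is wrapped in a `DoFn`. The sketch below assumes JSON payloads with two hypothetical fields, `device_id` and `temperature`; neither the field names nor the `parse_sensor_message` function come from the pipeline above. It returns `None` for malformed input so that a caller could drop such messages or route them elsewhere.

```python
import json


def parse_sensor_message(payload: bytes):
    """Decode one message payload into a row dict, or None if it is malformed.

    Field names are hypothetical; adjust them to the real message schema.
    """
    try:
        record = json.loads(payload.decode("utf-8"))
        return {
            "device_id": str(record["device_id"]),
            "temperature": float(record["temperature"]),
        }
    except (UnicodeDecodeError, ValueError, KeyError, TypeError):
        # Covers invalid UTF-8, invalid JSON, missing fields, and wrong types.
        return None


good = parse_sensor_message(b'{"device_id": "d1", "temperature": "21.5"}')
bad = parse_sensor_message(b"not json")
print(good, bad)
```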

Security and Compliance

- Use Cloud KMS for encryption.

- Apply least-privilege IAM policies.

- Enable audit logs.

- Anonymize sensitive data.
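One common way to handle the last point is to replace identifiers with a keyed hash before the data reaches the sink. The sketch below uses only the Python standard library. The function names, the `user_id` field, and the key are hypothetical, and keyed hashing is strictly pseudonymization rather than full anonymization, so whether it is sufficient depends on the compliance requirements in question.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (hex string)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def anonymize_record(record: dict, sensitive_fields: set, key: bytes) -> dict:
    """Return a copy of record with the sensitive fields pseudonymized."""
    return {
        field: pseudonymize(str(value), key) if field in sensitive_fields else value
        for field, value in record.items()
    }


# Placeholder key for illustration only; a real key would come from a secret store.
key = b"example-key-not-for-production"
event = {"user_id": "alice@example.com", "temperature": 21.5}
safe = anonymize_record(event, {"user_id"}, key)
print(safe)
```

Because the digest is deterministic for a given key, the same user still maps to the same token, which keeps joins and per-user aggregations possible downstream.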

Real-World Use Cases

- IoT Analytics

- Fraud Detection

- Sentiment Analysis

Future Trends

- Serverless-first architectures

- AI-driven streaming analytics

- Edge + Cloud integration
